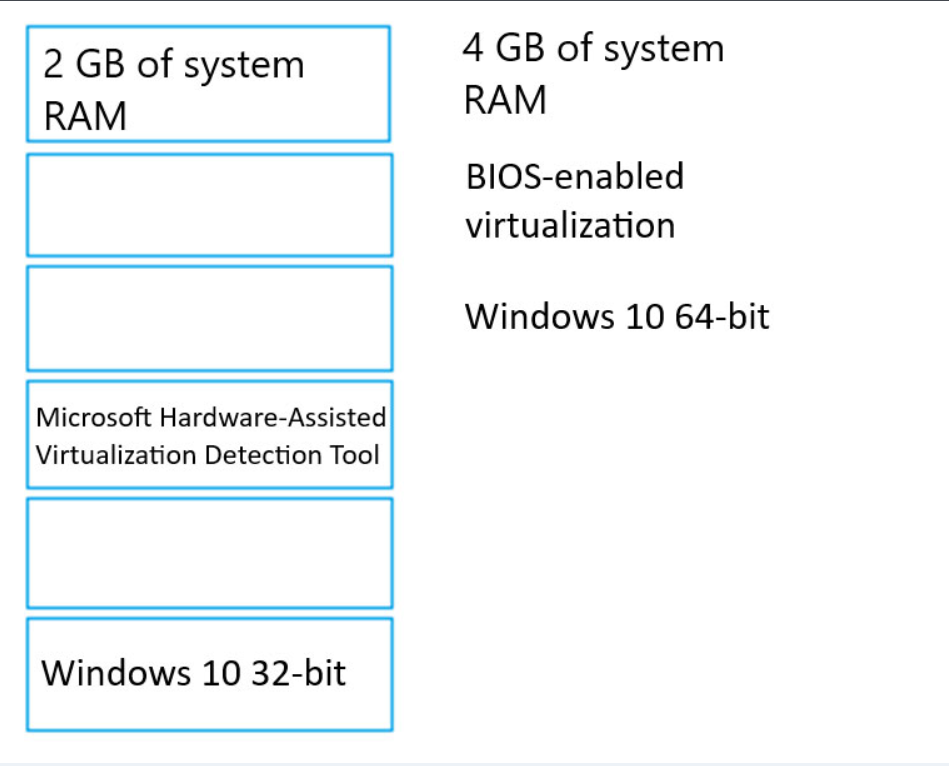

You need to consider the underlined segment to establish whether it is accurate.

To transform a categorical feature into a binary indicator, you should make use of the Clean Missing Data module.

Select `No adjustment required` if the underlined segment is accurate. If the underlined segment is inaccurate, select the accurate option.

A. No adjustment required.

B. Convert to Indicator Values

C. Apply SQL Transformation

D. Group Categorical Values

Answer: B

✅ Explanation

✅ Analysis:

Clean Missing Data handles missing or null values, for example by replacing them with a substitute value or by removing the affected rows or columns. It does not encode categories.

To transform (encode) categorical values into binary indicators (dummy variables), you must use:

✅ Convert to Indicator Values

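The remaining options do not fit either: Apply SQL Transformation runs a SQL query against the input datasets, and Group Categorical Values merges several category levels into fewer levels without producing indicator columns.

Therefore, the underlined segment is inaccurate, and the correct choice is B.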
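To make the idea of indicator values concrete, here is a minimal pure-Python sketch of the encoding itself. It is not the module's implementation. The function name `to_indicator_columns`, the `color` column, and the `column_category` naming scheme are all hypothetical choices made for illustration.

```python
def to_indicator_columns(column_name, values):
    """Return one 0/1 indicator column per distinct category in `values`.

    Hypothetical helper: the output column naming is an assumption,
    not the naming used by any particular tool.
    """
    categories = sorted(set(values))
    return {
        f"{column_name}_{category}": [1 if v == category else 0 for v in values]
        for category in categories
    }


# Toy data, illustrative only.
colors = ["red", "green", "red", "blue"]
indicators = to_indicator_columns("color", colors)

for name, column in indicators.items():
    print(name, column)
```

Each row has exactly one `1` across the new columns, marking which category that row belonged to. This is the property that distinguishes indicator encoding from filling in missing values or regrouping categories.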